ARKit and ARCore bring immersive computing at scale. What will we build?

June 5, 2017, was the day that everything changed. During Apple’s 2017 Worldwide Developers Conference, the company announced the beta availability of ARKit, the native software layer for blending digital objects with the real world. In the three short months since that announcement, ARKit has taken the world by storm, unleashing a series of developments that will not only put augmented reality at the forefront of mobile computing, but also provide new momentum for virtual reality. And just in time.

AR’s Long and Winding Road

Augmented reality had been wandering in the wilderness for some years. The explosion of the smartphone market led to a crop of startups circa 2010, such as Metaio and Layar, that brought the camera and location services together in innovative applications. These ventures enjoyed mixed success at best, getting acquired or pivoting as the market was slow to materialize.

One notable exception is Vuforia, a supplier of AR middleware that spun out of Qualcomm and was purchased by CAD software giant PTC. Vuforia has continued to find purchase via a combination of enterprise and consumer applications, and powers tens of thousands of AR experiences today. But for years, even Vuforia’s AR was considered a “gimmick” for many applications, not an essential enabling layer of what many of us believe is the next step change in computing, the immersive interface.

Then along came VR.

Back In the Limelight

Looking back on the stratospheric rise of virtual reality since the Oculus Kickstarter five years ago, I am still amazed. I am obviously jaded about this space, having worked in it for over twenty years, so I was very skeptical about the possibilities when I first tried the DK1. It wasn’t just the low resolution, nausea-inducing tracking and insufferable form factor; those should have been enough to kill this thing in its infancy. No, I was more skeptical about the market, because I had been down this road before. I couldn’t imagine that consumers were ready for an immersive computing experience, because I had seen too many failures, both personal and industry-wide, that were clear indications that the world was not ready for 3D.

Obviously, I was wrong about Oculus. Enough things had changed in recent years, apparently, that folks could see the potential in a fully immersive computing experience. Not just the tech industry, but consumers and customers. And so, game on: here we are, five years into the immersive computing revolution, thanks in large part to the Oculus Rift.

With the resurgence of consumer VR came a parallel renaissance in augmented reality. AR industry players wisely took advantage of the spotlight VR cast on immersive technology, and renewed their marketing efforts, riding on its coattails.

This doesn’t mean that AR was standing still that whole time. Pokémon GO and Snapchat filters shipped as mass-market consumer AR phenomena, followed by Facebook’s camera-based AR platform, which premiered at F8 this year. Also, Microsoft HoloLens and Google Tango have been pushing the envelope on industrial AR hardware for several years. But I think it’s fair to say that these projects have enjoyed additional consumer awareness due to the massive buzz that was building thanks to VR.

And right as AR stepped back into the limelight, ready for its close-up, along came ARKit.

Immersion at Scale

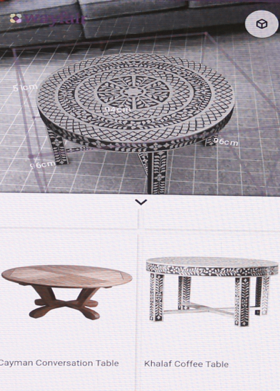

On the heels of ARKit’s release, Google announced ARCore, an Android API that brings a subset of Tango’s AR technology to consumer-grade Android phones, notably the Samsung Galaxy S8 and Google’s Pixel line. ARCore is similar in features to ARKit, offering single-plane surface recognition, positional tracking (so you can blend true 3D graphics, not just sprites overlaid on your camera image), and light estimation.

ARKit and ARCore leave out some of AR’s more promising features, some of which require additional hardware support, such as environment reconstruction (aka SLAM). But it’s reasonable to think that, over time, those core technologies will migrate into newer generations of mobile devices. In the interim, smart software libraries like Vuforia can fill the gap with a combination of on-device APIs and cloud-based services (not to mention providing much-needed cross-platform capability across the two operating systems’ AR APIs).

While the feature sets for ARKit and ARCore don’t run the gamut of what many of us would like to see for “true” AR, this is a significant development nonetheless. Because with these two systems, we now have a baseline AR capability that spans the two most popular mobile ecosystems, and reaches nearly half a billion devices.

And Apple isn’t standing still. Today, the world changed again with the company’s press event about the upcoming iPhones. iOS 11 ships on September 19th, and with it comes the ability to deliver AR to between 300 and 400 million phones. The iPhone 8, coming out on September 22nd, has even tighter integration among the components that run AR. And the iPhone X, previewed today, comes with Face ID, based on a TrueDepth camera system (a depth camera built from an IR camera, flood illuminator, and dot projector) paired with an on-device neural network on a chip (the A11 Bionic chip… what?), the better to recognize you and only you, securely. The same face-tracking tech also drives true 3D AR filters and Animoji: cartoon characters that speak for you in your instant messages.

It’s safe to say that Apple appears to be doubling down on its AR bet.

Now that the big guys have stepped into AR with full force, the world has taken notice. We’re seeing a huge wave of interest in AR from game developers, storytellers, brand creatives, and enterprise application developers. It’s fair to say that we could be approaching a tipping point for immersive development that we hadn’t seen with VR, because now AR promises scale, based on the phone in your pocket, with no need to strap anything funky on your head.

VR Will Have Its Day (Again)

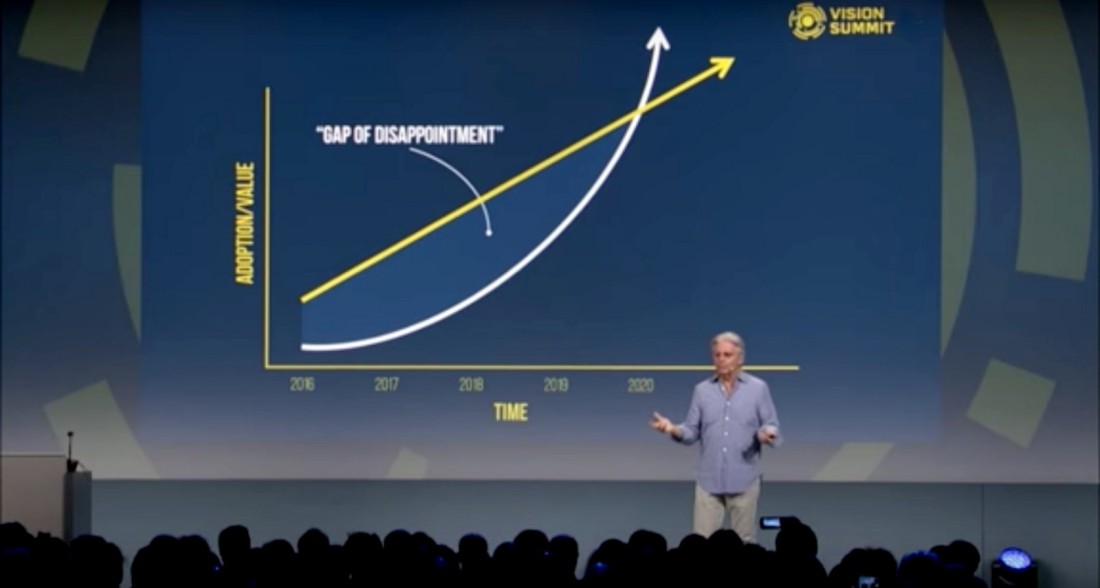

This reinvigorated interest in AR comes at a time when VR is sputtering a bit. Many of us knew that mass-market adoption of VR was going to take a while. Unity’s CEO (and my boss) John Riccitiello spelled this out in a keynote address at the Vision Summit in 2016, a talk which has come to be known as his “gap of disappointment” speech. The basic idea was that, while we’re bullish on the long-term growth of XR, we know that things are going to take longer than a lot of folks expect.

John also delivered the keynote at this year’s VRLA conference, and his talk there featured a slightly more upbeat, updated version of this concept. John laid out what he thinks it will take, specifically, to reach mass adoption of XR. Have a look if you haven’t seen it.

The biggest takeaway from this talk is that we need two critical things to hit mass scale: 1) a total cost of ownership under $1,000, and 2) a couple of hundred million units shipped.

VR is nowhere near that yet. While costs are quickly dropping, we are generously at 10 million units (not counting Google Cardboard; but that’s a debate for another day).

ARKit and ARCore, on the other hand, get us to that mass scale in one stroke.

Now, John wasn’t talking about AR in that particular keynote. He was focused on VR. But these two technologies occupy a spectrum of immersion. At the highest level, VR, AR and headset-based mixed reality are all about immersive 3D graphics. Yes, there are some points of divergence. But in general, it’s real-time 3D objects, environments and characters viewed in an immersive 360-degree environment. The skills you need to build for one translate well to the other, and consumers will become more and more ready for, even expectant of, interactive 3D content regardless of its delivery medium. And of course, you can use Unity to build for all of them. Mwahah…

The recent big developments in AR threaten to steal VR’s thunder. We are already seeing a bit of an exodus by developers attracted by the lure of scale and the potential for monetization that it brings. Despite VR offering a more complete and compelling trip, the user base for AR is nearly two orders of magnitude larger. Why invest time and energy into creating something that can be seen by 10 million people, when you can put that same energy (actually, quite a bit less in most cases… AR content tends to be much less complex) into making something for an audience of half a billion? It’s hard to argue with those numbers.

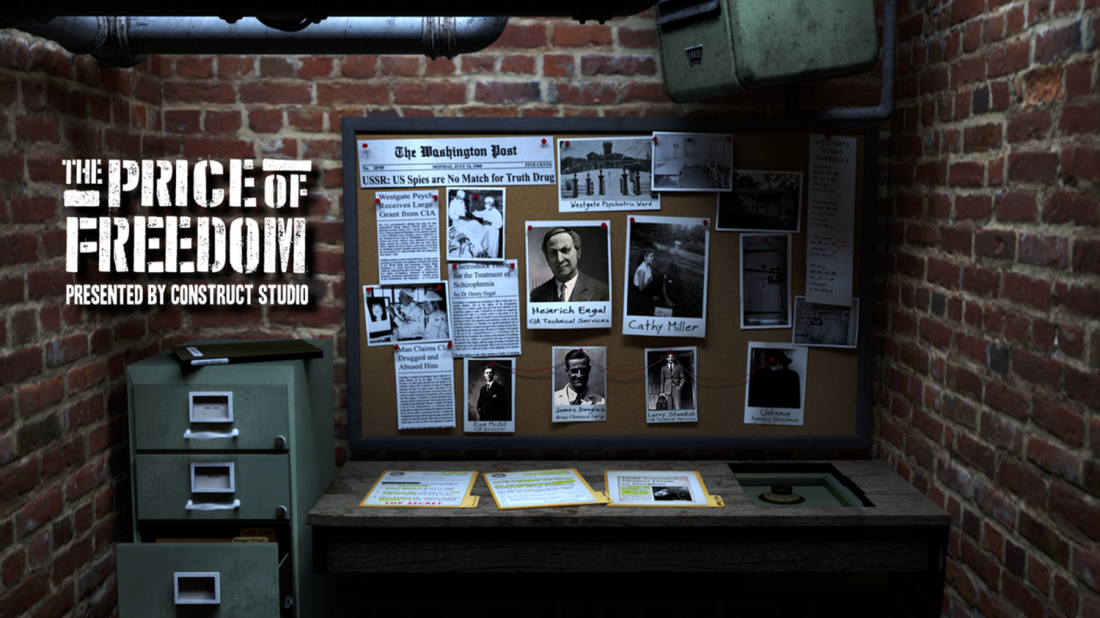

But I am guessing this is simply a stage in the ongoing development of immersive computing. On balance, I think that it’s going to be a good thing for AR to get the attention for the next little while. VR needs more time to get the pricing and form factors right. But there’s no replacement for the sheer joy of completely enveloping yourself (head, hands and body) in an experience that takes you to another place. VR enables magical realms that AR can’t, by offering complete escape. The pendulum may swing to AR for a while, but there are so many great use cases for VR that I believe it will have its day again, and the fresh energy that AR brings to the world of immersive computing should give it enough lift to keep VR rolling too.